Reflections on SB 1047 and Future Frontier AI Regulations

On September 29th, California Governor Gavin Newsom vetoed SB 1047, a proposed bill that would have regulated large language models (LLMs). The bill targeted models trained with budgets of $100 million or more. It was designed to prevent catastrophic harm caused by the deployment of artificial intelligence, and it would have enabled lawsuits against companies perceived to be causing such harm.

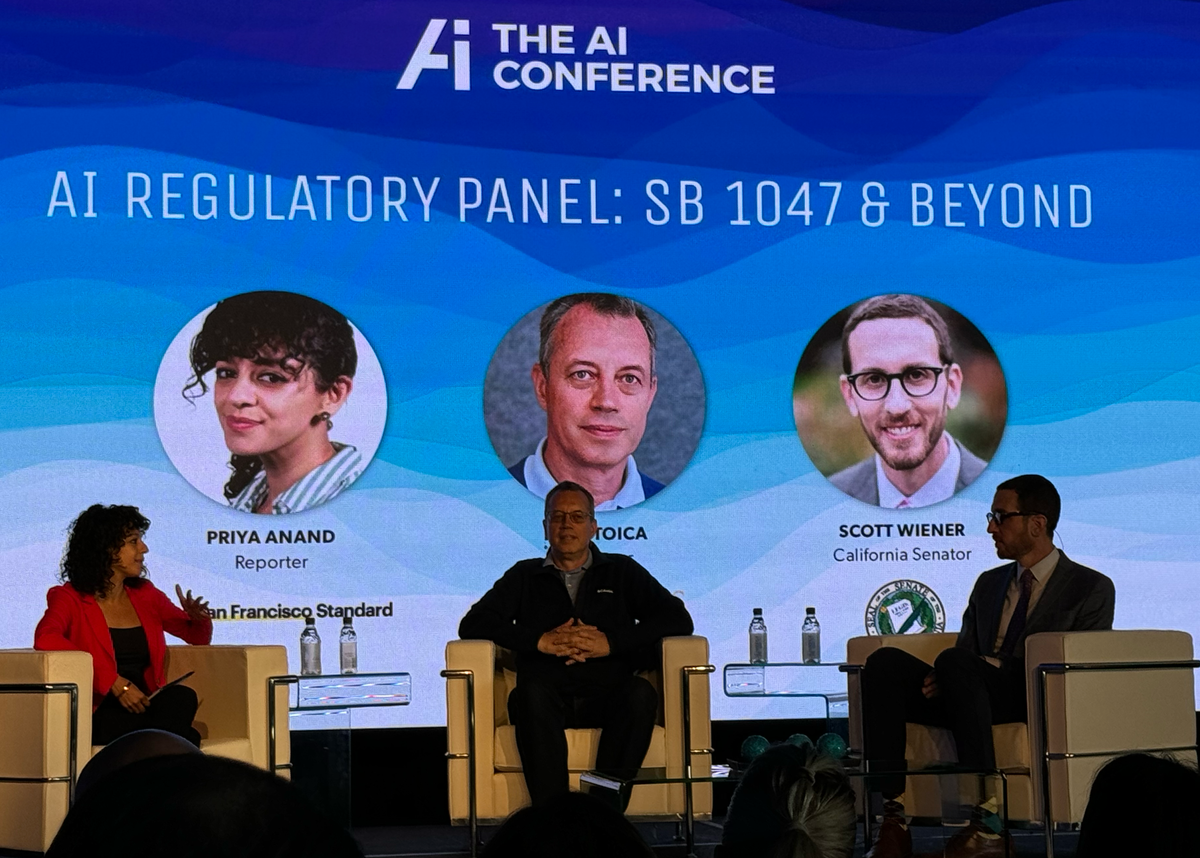

During a panel discussion at the AI Conference in San Francisco on September 11, Ion Stoica of UC Berkeley's Electrical Engineering and Computer Sciences (EECS) department and California State Senator Scott Wiener, the bill's author, debated its merits. Stoica argued that the bill's scope was arbitrary: it singled out one type of technology (LLMs) while others, such as social media, remain unregulated. He pointed out that laws already exist to prevent crimes that cause catastrophic harm. In his view, adding layers of regulation would push AI innovation out of California, stalling technological progress and potentially creating greater harm if the technology were to leave the United States. Additionally, the bill's definition of "catastrophic harm" was far too open to interpretation and would invite a litany of lawsuits, threatening both large tech companies and early-stage startups.

One could even argue that the core technology itself is grounds for a lawsuit, since the substantial energy used to train an LLM could be considered harmful to the environment. Concerns over the stringent and subjective nature of SB 1047 led Newsom to return the bill without a signature, but the bill raised ongoing questions about how best to safeguard this emerging technology.

Does it make sense for individual states to regulate technology differently, or should there be a nationwide approach and a governing entity such as the U.S. AI Safety Institute? If the goal is to prevent harm to infrastructure, is regulation the best method? What existing technology regulations are analogous? Should certifications like those required for electronic devices be applied in a similar fashion to AI?

Cecilia Ziniti, who presented on AI legal issues at the AI Conference, discussed the recent legal battle between The New York Times (NYT) and OpenAI/Microsoft. The NYT claims that OpenAI violated its copyright by training its models on NYT content and producing answers that contain verbatim excerpts of NYT articles. This behavior is known as "regurgitation": a model reproduces memorized portions of its training data word for word. It is unintended, and it is distinct from hallucination, in which a model generates fabricated content. OpenAI argues that its use of NYT articles qualifies as "fair use."

If a regulatory concern centers on AI sentience and legal accountability, there are many questions to explore. How should different intelligent species be regulated? Are human laws applicable to other intelligent species if they can pass a Turing test or achieve human-level intelligence? For example, if a chimpanzee, dolphin, or parrot somehow evolved to achieve human-level intelligence, would the species or individual be subject to human laws? Does the same reasoning apply to technology? Would a camera or photocopier require separate copyright laws based on its technology, or does existing law suffice? Existing copyright law places the burden on the user of the machine rather than on the device or its manufacturer. If a device were to violate a law independently, without a user, what would the punishment be, and who would be punished?

These scenarios and many others should be debated to formulate the appropriate safeguards and technology guardrails.