Empowering Healthcare Designers: Navigating AI's Emerging Frontier of Impact

A Recap from Design Wednesdays Journal Club

On October 4th, 2023, The Better Lab hosted a special edition of their Design Wednesdays Journal Club, an initiative aimed at fostering discussion, critical thinking, and the exchange of ideas on health and design. For those unfamiliar, The Better Lab is a design-focused entity dedicated to improving healthcare through human-centered design. Their Journal Club offers a collaborative space for healthcare professionals, students, and innovators to critically appraise relevant literature and emerging trends within the health and design community.

This particular session featured an in-depth look at Artificial Intelligence (AI) in healthcare design and its potential implications for the healthcare system. Mariana Salvatore, the design director of The Better Lab, moderated the session, stepping in for Bella Shah during her clinical shift at Kaiser, Oakland. Mariana emphasized the importance of the Journal Club as a platform for professionals to connect, share ideas, and propose future speakers.

The featured speaker for the session was Soren DeOrlow, the founder of Resonance Partners, a San Diego-based innovation consultancy. With a rich portfolio that includes Johnson & Johnson, Stanford Medicine, Athena Health, Kenvue, and UCSF’s Department of Surgery, Soren brings a wealth of experience in harnessing emerging technologies to drive innovation in healthcare. With multiple patents and a Master’s in Integrated Design, Business, and Technology from USC, his insights into AI and human-centered design offer a unique perspective on how cutting-edge technologies can transform healthcare workflows, improve patient outcomes, and reduce clinician burden.

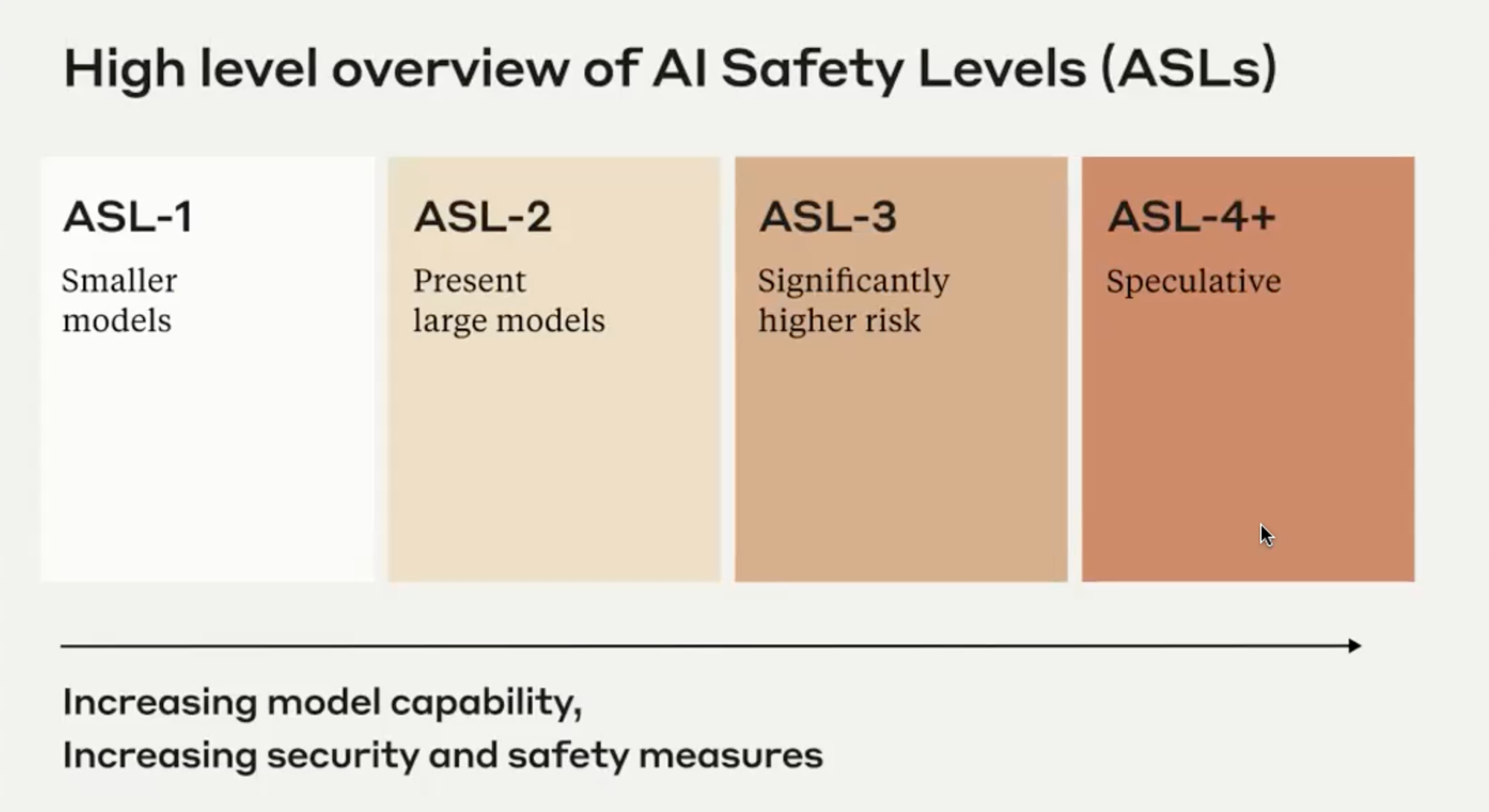

This session of the Journal Club began with a review of two articles that set the stage for a deep dive into AI. The first article, from Stanford Medicine's Scope Blog, featured an interview with Dr. Chen discussing the promises and pitfalls of AI in healthcare, particularly the automation of administrative tasks and the risks posed by current AI systems that may generate inaccurate information. The second article, from Anthropic, expanded on AI safety, exploring the challenge of building reliable and ethically steerable systems that will integrate seamlessly into all areas of society.

The conversation that followed, led by Soren, explored a wide range of critical topics in AI. He addressed ethical considerations, AI as a general-purpose technology, and foundational concepts like transformers and attention mechanisms that are advancing natural language processing (NLP). Soren also covered applications such as named entity recognition, computer vision, and the evolving role of large language models (LLMs) in enhancing performance, including through retrieval-augmented generation (RAG). Additionally, he highlighted the exciting potential of future multi-modal capabilities and autonomous agents, framing AI not just as a tool for human mimicry but as an exponential technology with vast implications. The discussion underscored the need for careful integration, balancing innovation with reliability, safety, and ethical responsibility.

The video and presentation from this Design Wednesdays Journal Club can be found here: https://ucsf.app.box.com/s/a0k068s9xn2fknfqtjnevj3egshs1pqh

Presentation: https://colab.research.google.com/drive/156zhAN78FIOoprSoQcpwK0ZNnbz7bpCG#scrollTo=IDn2a4_u8hyx

Four Recommendations for Navigating AI's Emerging Frontier of Impact

Recommendation 1:

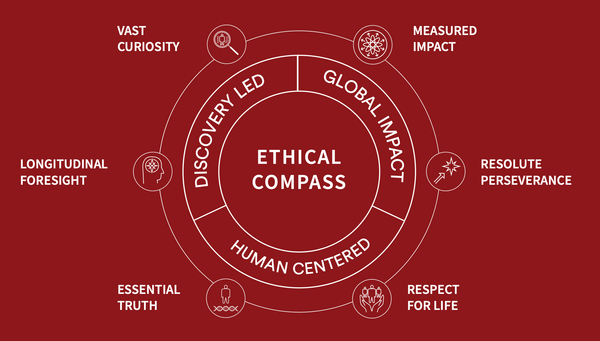

Prioritize ethical considerations and responsible applications of AI that leverage high-quality data and technology.

"AI should act with care & humility." — Ben Mann, Co-founder Anthropic

With any application of AI, it is important to consider the unintended consequences that might occur. Numerous thought leaders are shaping the discussion around the ethics of AI, and Anthropic is one of them. They have taken a robust approach to AI ethics and applied human-centered values in the way they are building their LLM, Claude. Claude is built with "Constitutional AI," an approach that guides the model with a written set of principles emphasizing care, honesty, and harm avoidance, including respect for personal privacy and the avoidance of toxicity. Healthcare innovators should draw inspiration from Anthropic when developing with exponential technologies.

There are numerous thought leaders in ethical and unbiased AI. Below are several whose work is recommended reading.

Stanford HAI Ethics & Artificial Intelligence https://hai.stanford.edu/ethics-and-artificial-intelligence

Kristian Simsarian - TEDx Cincinnati "Are We Being The Parents AI Needs Us To Be?" https://www.youtube.com/watch?v=-h8Y3ugyihE

Chip Huyen — Designing Machine Learning Systems / Model Cards - nutritional facts for ML models https://www.oreilly.com/library/view/designing-machine-learning/9781098107956/

Dr. Kalinda Ukanwa — Algorithmic Bias in Service https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3654943

Dr. Joy Buolamwini - Coded Bias / Unmasking AI https://www.penguinrandomhouse.com/books/670356/unmasking-ai-by-joy-buolamwini/

Recommendation 2:

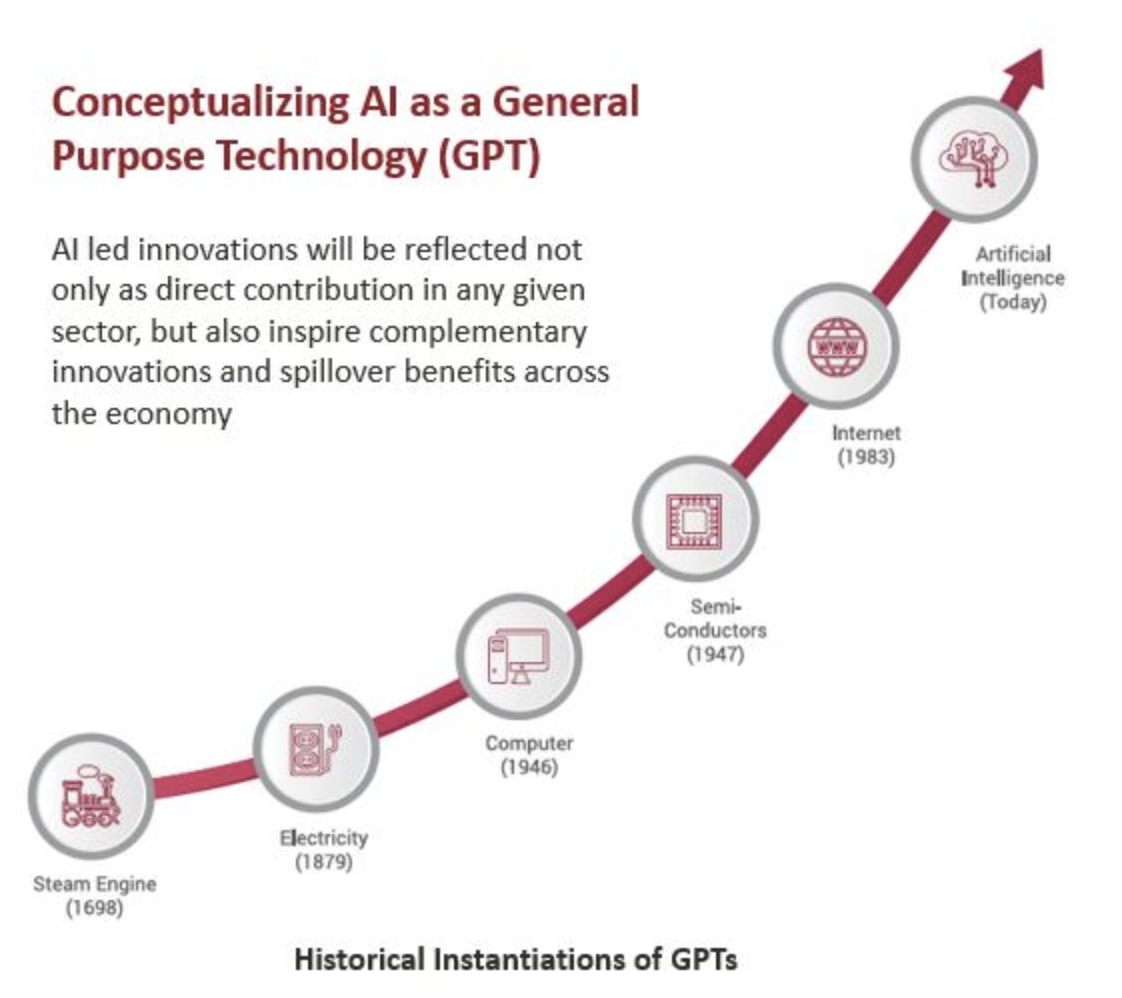

Understand that AI will become a general-purpose technology similar to the internet, computers and electricity which will provide great potential to improve the landscape of healthcare in the future.

One of the most compelling points to be made on this emerging domain is the importance of reframing AI as a "general-purpose technology" rather than focusing solely on its intelligence. Much like electricity or the internet, AI is a foundational technology that can be applied across industries, driving innovation and efficiency. For healthcare, this could mean everything from reducing administrative burdens (like filling out electronic medical records) to improving diagnostic accuracy and patient outcomes.

AI's potential economic impact is substantial, with some estimates predicting a global boost of 7% in productivity due to AI adoption. However, while the technology is advancing rapidly, healthcare must proceed with caution to avoid repeating the AI winters of the past, where hype led to disappointment.

Recommendation 3:

AI's development began with attempts to mimic the human thought process, but it is better to think of modern AI as an exponential technology that can contribute to a functional task or connect silos of data.

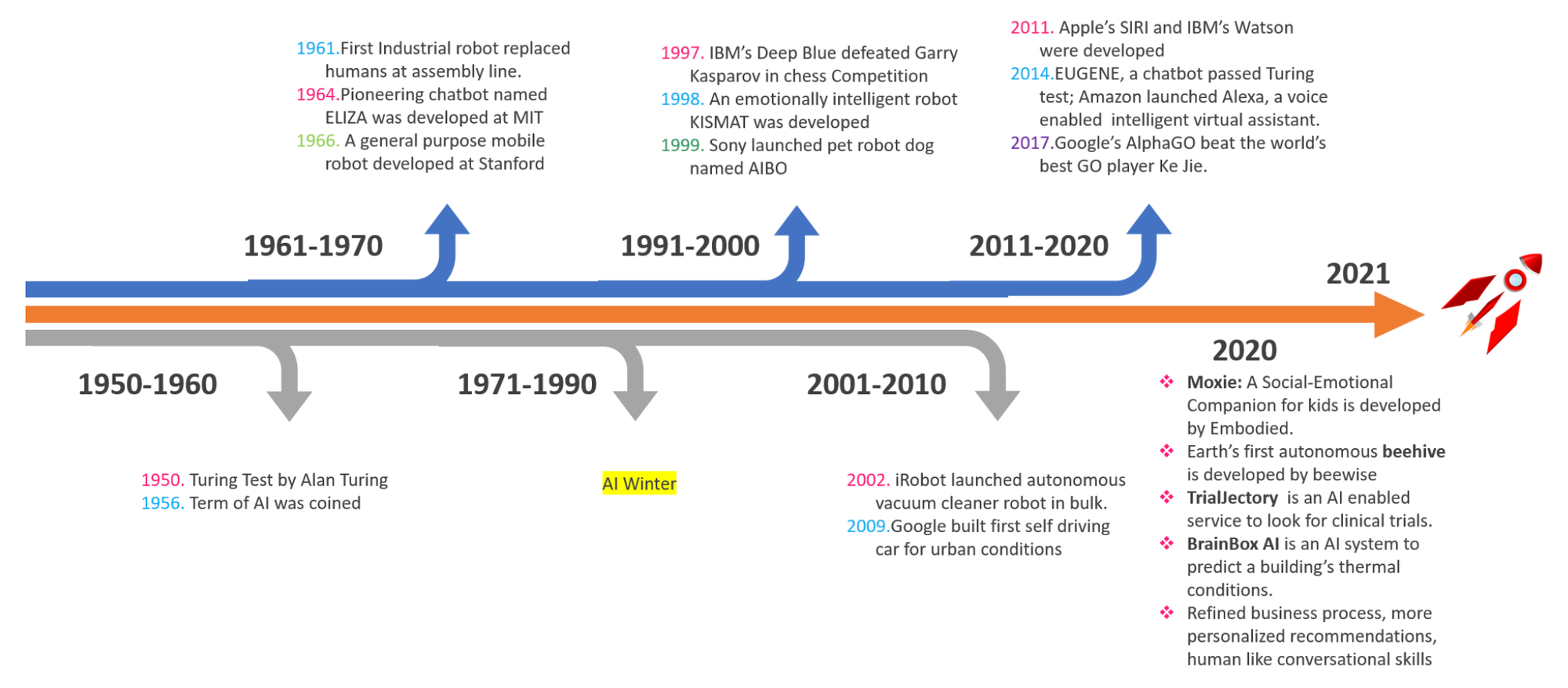

For many decades, the Turing Test, proposed by Alan Turing, served as a benchmark implying that all artificial intelligence endeavors should strive to re-create human intelligence. This goal still exists as companies like OpenAI seek to achieve artificial general intelligence, or "AGI." One of the key shifts in AI, or rather "exponential technology," is that it is not just about replacing human intelligence; it is about augmenting it. "Narrow" applications of AI focus on specific tasks that can be implemented within strict parameters and deliver great utility.

In healthcare, narrow AI can help clinicians work more efficiently by automating routine tasks, allowing them to focus on patient care. The combination of human expertise and AI’s computational power presents an exciting opportunity to transform the healthcare landscape by augmenting care delivery and streamlining administrative tasks.

Recommendation 4:

Develop a curiosity for the key technology components of modern AI and harness them for impact.

Below is a brief summary of key technology components or "building blocks" within the field of AI (note that numerous underlying technologies contribute to each component but are not explicitly listed below for brevity).

Natural Language Processing

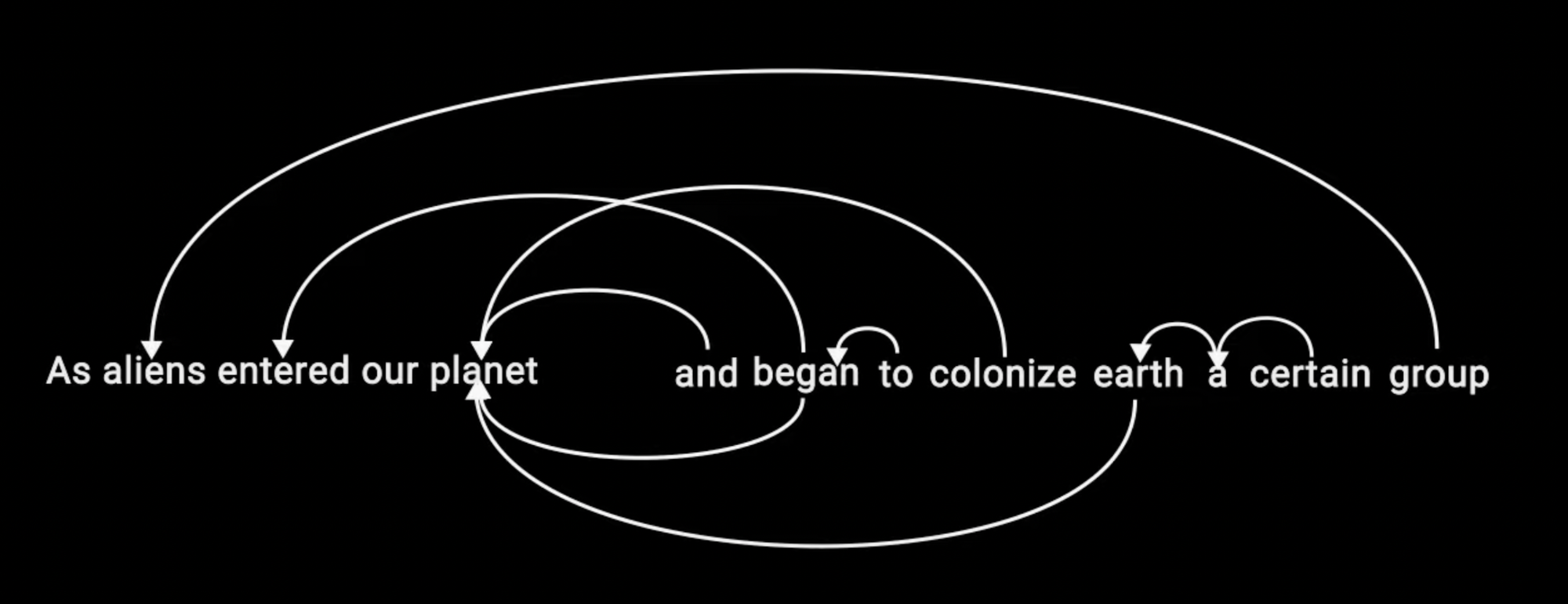

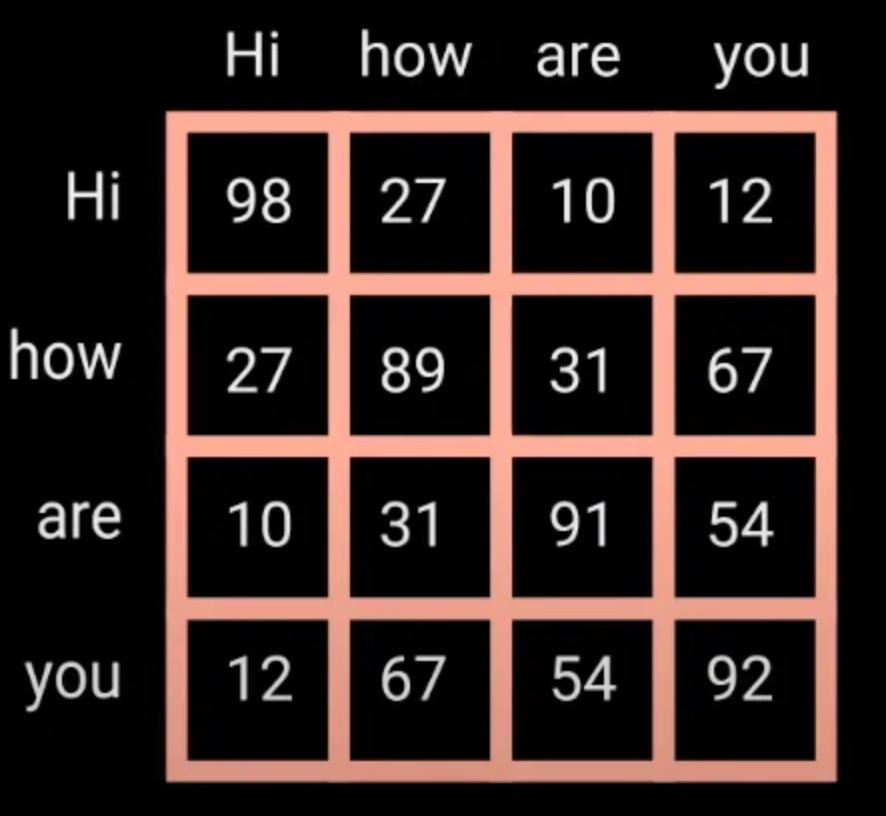

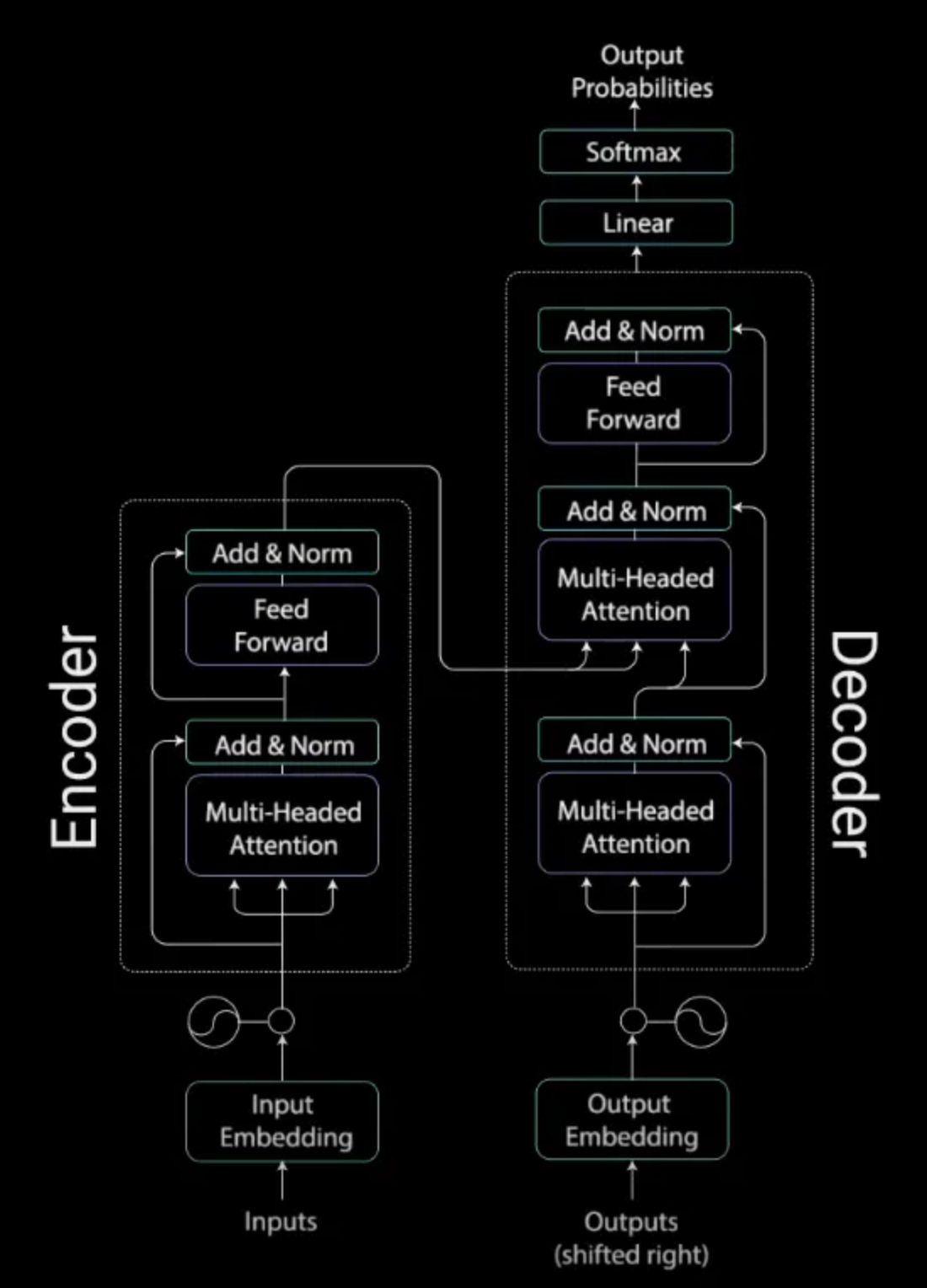

The first component to explore is natural language processing (NLP). NLP is a sub-field of computer science and artificial intelligence focused on enabling computers to understand human language and ultimately communicate. NLP has helped computers to process bodies of text for a variety of purposes such as spell checking, language translation, summarization and sentiment analysis, but the capabilities are rapidly advancing. In 2017 a seminal paper, "Attention Is All You Need," was published; it dramatically changed the landscape of NLP and was the precursor to large language models. The paper introduced the transformer architecture, built around an attention mechanism that weighs how relevant each word in a sequence is to every other word. Models built on this architecture estimate the probability of a word occurring within a sequence of words, which ultimately enables a computer to create conversational text.
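To make the attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only, not code from the presentation: the three tokens and their two-dimensional embeddings are hypothetical, and real transformers add learned query, key, and value projections, multiple attention heads, and many stacked layers.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for three tokens.
tokens = ["patient", "reports", "pain"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: each token attends to every token in the same sequence.
outputs, weights = attention(embeddings, embeddings, embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to the others; the weights in a row always sum to 1.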

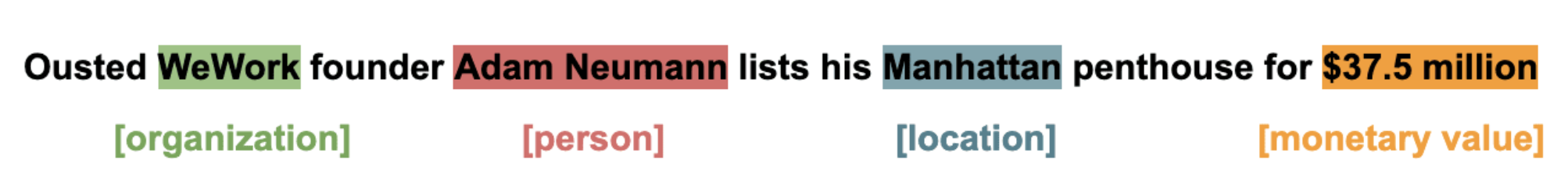

Named Entity Recognition (NER) is a subset of NLP that helps computers to understand language by locating words and phrases in text and classifying them into categories such as people, organizations, locations, medications, or conditions.
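As a rough illustration of what NER output looks like, the sketch below tags entities using a simple dictionary (gazetteer) lookup. This is an assumption made for brevity: production NER systems typically use trained statistical or neural models rather than a fixed word list, and the `GAZETTEER` entries, labels, and sample note are all hypothetical.

```python
import re

# Hypothetical gazetteer mapping lowercase phrases to entity labels.
GAZETTEER = {
    "metformin": "MEDICATION",
    "type 2 diabetes": "CONDITION",
    "ucsf": "ORGANIZATION",
}

def tag_entities(text):
    """Return (matched_text, label, start_index) tuples ordered by position."""
    found = []
    for phrase, label in GAZETTEER.items():
        # Match the phrase as whole words, ignoring case.
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            found.append((match.group(0), label, match.start()))
    return sorted(found, key=lambda item: item[2])

note = "Patient seen at UCSF for type 2 diabetes; started Metformin."
entities = tag_entities(note)
for text, label, start in entities:
    print(f"{label:<12} {text!r} at character {start}")
```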

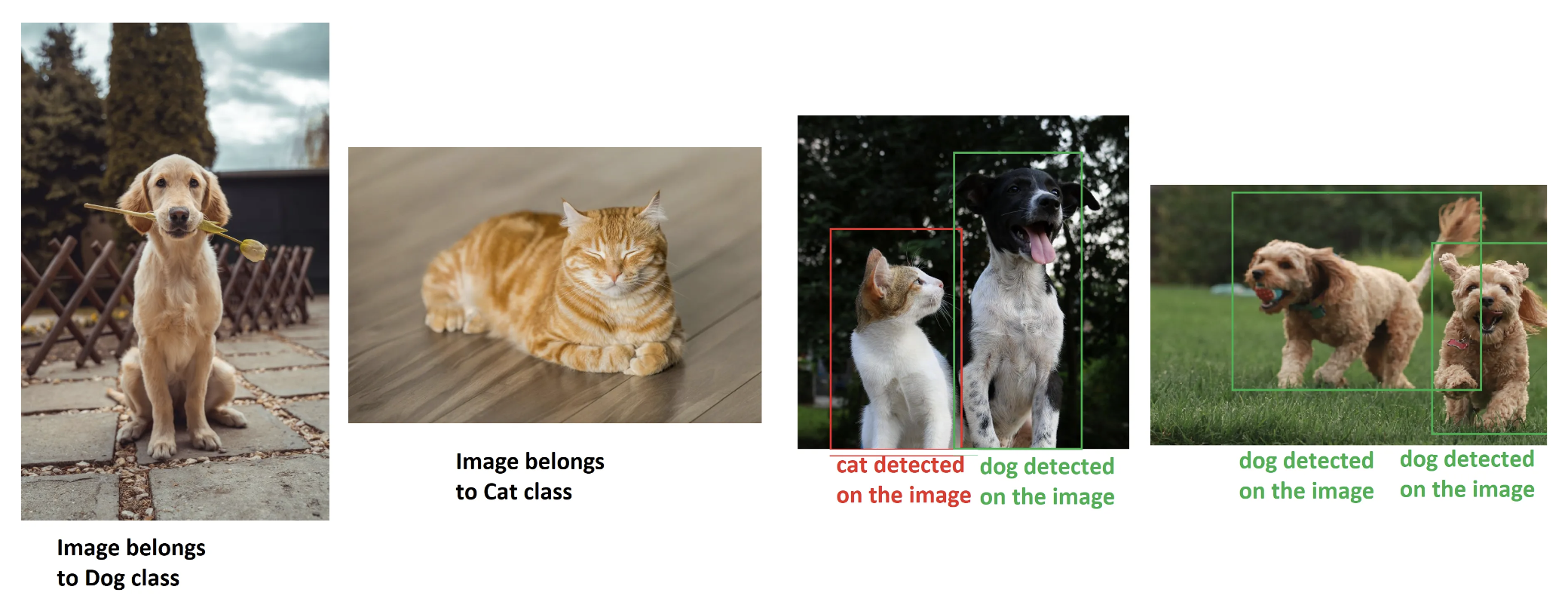

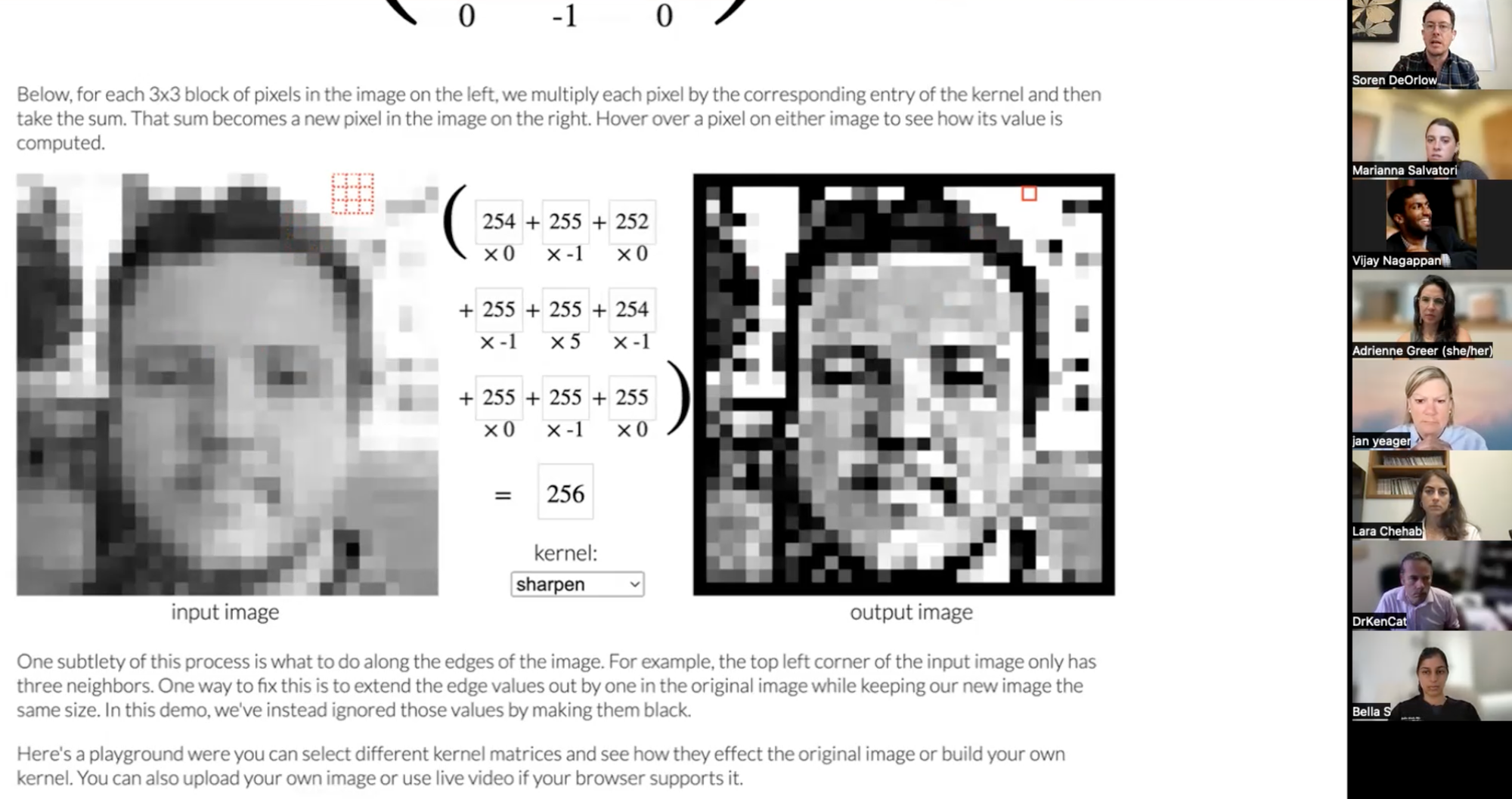

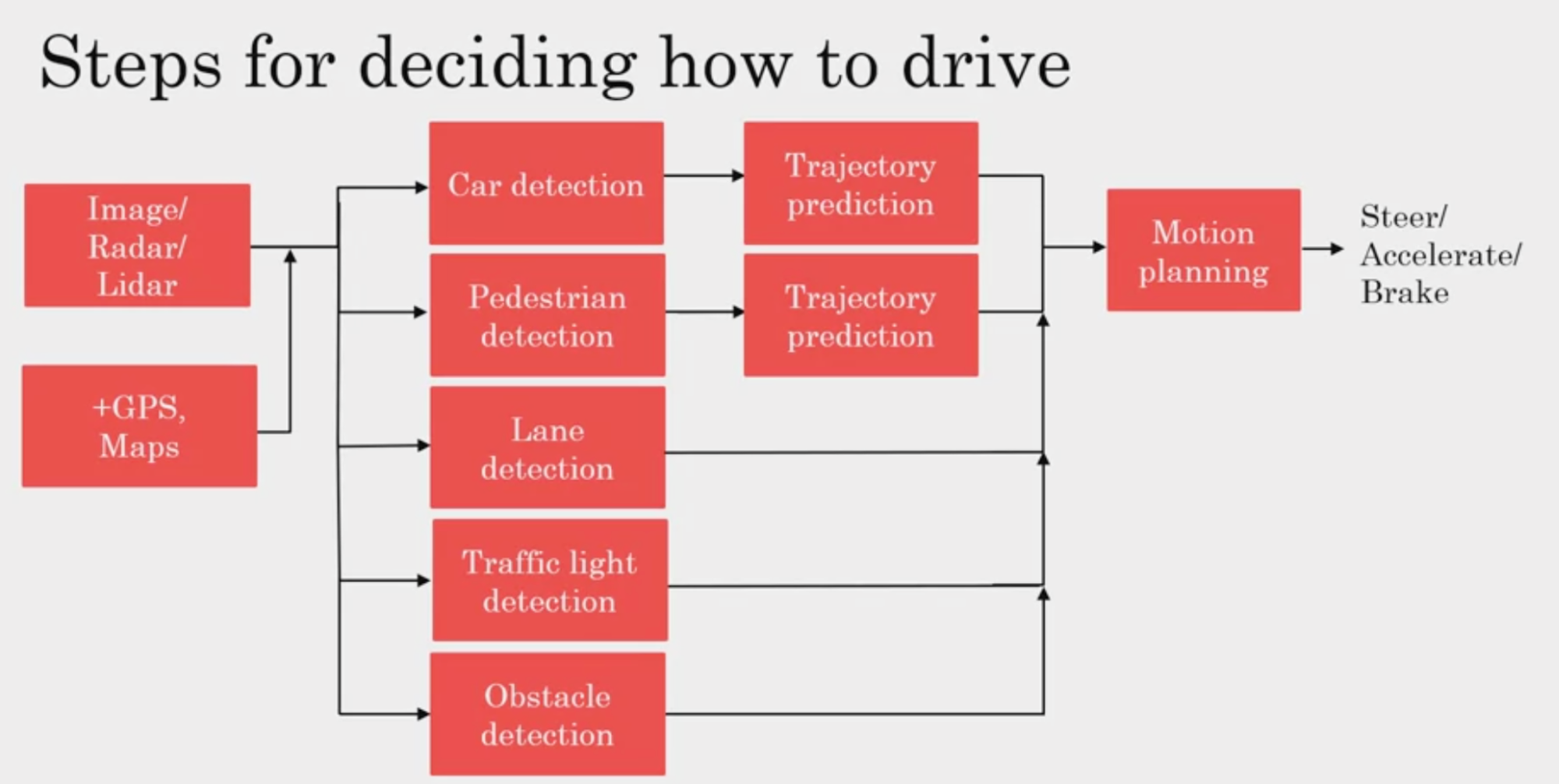

Computer Vision

Computer vision commonly uses a type of neural network called a convolutional neural network (CNN) to teach computers to extract information from digital images. CNNs learn frequently occurring patterns of pixels, such as edges and textures, and combine them into higher-level features that identify a class across images. Each pixel is represented as a number, and a small grid of weights called a kernel slides across the image, multiplying and summing the pixels it covers to produce a feature map. When a CNN is trained on very large image datasets, the kernel weights are adjusted so that the resulting features predict the correct classification for each image. Computer vision has shown great promise in radiology where, in some cases, computers have been able to outperform human experts.
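The kernel operation at the heart of a CNN can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions: the tiny image and the hand-written vertical-edge kernel are hypothetical, whereas a real CNN learns its kernel values during training and stacks many such layers.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped,
    so this is technically a cross-correlation.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Multiply each covered pixel by its kernel weight and sum.
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map

# Hypothetical grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map is zero over the flat dark region and positive wherever the kernel window overlaps the edge, which is how a kernel "detects" a pattern of pixels.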

AI Autonomous Agents (e.g., Roomba) are engineered to perceive the environment around them and act on it. Each observation the agent makes is a percept, and the history of observations forms a percept sequence that the agent maps to actions. Advanced agents are able to predict events and respond appropriately. A Roomba vacuum is continuously perceiving the environment around it, tracking areas (features) such as open floor versus walls or furniture and responding to each through its percept sequence (if a wall is detected, turn the Roomba).
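The Roomba example can be sketched as a simple reflex agent. This is a toy illustration, not how any actual robot vacuum is programmed: the percept names (`"wall"`, `"dirt"`, `"open_floor"`) and action names are hypothetical.

```python
def choose_action(percept):
    """Condition-action rules: map the current percept to an action."""
    if percept == "wall":
        return "turn"
    if percept == "dirt":
        return "vacuum"
    return "forward"

def run_agent(percepts):
    """Feed percepts to the agent one at a time, recording what it does."""
    percept_sequence = []  # everything the agent has perceived so far
    actions = []
    for percept in percepts:
        percept_sequence.append(percept)
        actions.append(choose_action(percept))
    return percept_sequence, actions

history, actions = run_agent(["open_floor", "dirt", "open_floor", "wall"])
for percept, action in zip(history, actions):
    print(f"perceived {percept:<10} -> {action}")
```

This agent reacts only to the latest percept. A more advanced agent would also consult the stored percept sequence, for example to avoid revisiting areas it has already cleaned.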

Generative AI

A significant breakthrough in NLP is the transformer and attention mechanism technology that emerged in 2017, advancing the field substantially and paving the way for Generative AI. The landscape of generative AI is now continually growing and evolving in new ways. Below is a list of popular models that rely on this technology.

- Generative AI Text Generation - Large Language Models (OpenAI's GPT, Meta's Llama, Google's Bard, Anthropic's Claude 2)

- Generative AI Image Generation - Diffusion models (Stable Diffusion, DALL-E, Midjourney)

- Medical/Scientific Generative AI (Med-PaLM, AlphaFold)

Revisiting The Transformer Architecture

The transformer architecture gives the model an awareness of which content has been tokenized, the order of that content, and a contextual embedding of it, all of which ultimately drive the output. Scaling an LLM expands the long tail of tasks that the model can handle. Scaling also increases the probability of a desired output occurring and improves overall accuracy.
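A minimal sketch of the first two ingredients, tokenization and order, is shown below. It is illustrative only: the whitespace tokenizer and the small embedding `table` are hypothetical stand-ins (real LLMs use subword tokenizers and learned embeddings), while the sinusoidal positional encoding follows the formula from the original transformer paper. The contextual part of the embedding comes later, from the attention layers.

```python
import math

def tokenize(text):
    """Whitespace tokenizer; real LLMs use subword tokenizers instead."""
    return text.lower().split()

def positional_encoding(position, dim):
    """Sinusoidal encoding: sine on even indices, cosine on odd indices."""
    encoding = []
    for i in range(dim):
        angle = position / (10000 ** (2 * (i // 2) / dim))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def embed(tokens, table, dim):
    """Add each token's embedding to the encoding of its position."""
    return [
        [e + p for e, p in zip(table[tok], positional_encoding(pos, dim))]
        for pos, tok in enumerate(tokens)
    ]

# Hypothetical 4-dimensional embedding table for a two-word vocabulary.
table = {
    "pain": [0.5, 0.1, 0.0, 0.2],
    "no": [0.0, 0.3, 0.4, 0.1],
}

tokens = tokenize("No pain no")
vectors = embed(tokens, table, dim=4)
for tok, vec in zip(tokens, vectors):
    print(tok, [round(x, 3) for x in vec])
```

The word "no" appears twice but receives a different vector each time, because its position differs. That is how the model can tell the order of otherwise identical tokens.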

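Retrieval Augmented Generation (RAG)

RAG is a way to improve the factual quality of LLMs without retraining them. Before the model answers, a retrieval step searches a data source, such as a private data repository, for relevant passages and adds them to the prompt. This lets an organization customize how an LLM responds using its own data.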
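The retrieval half of RAG can be sketched without any LLM at all. The example below is a toy under stated assumptions: it ranks documents with bag-of-words cosine similarity, whereas real systems usually use learned embeddings and a vector database, and the documents, question, and prompt wording are hypothetical. The resulting prompt would then be sent to whichever LLM is in use.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Count lowercase word occurrences in the text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(
        documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Prepend the retrieved context to the question."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical private repository of clinic documents.
documents = [
    "Clinic hours are 8am to 5pm on weekdays.",
    "The discharge checklist requires a medication review.",
    "Parking validation is available at the front desk.",
]

question = "What does the discharge checklist require?"
prompt = build_prompt(question, documents)
print(prompt)
```

Because the model is handed the relevant passage at question time, the repository can be updated without retraining the model.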

Startups are exploring how to combine all of the various exponential technologies to create bespoke multi-modal AI agents.

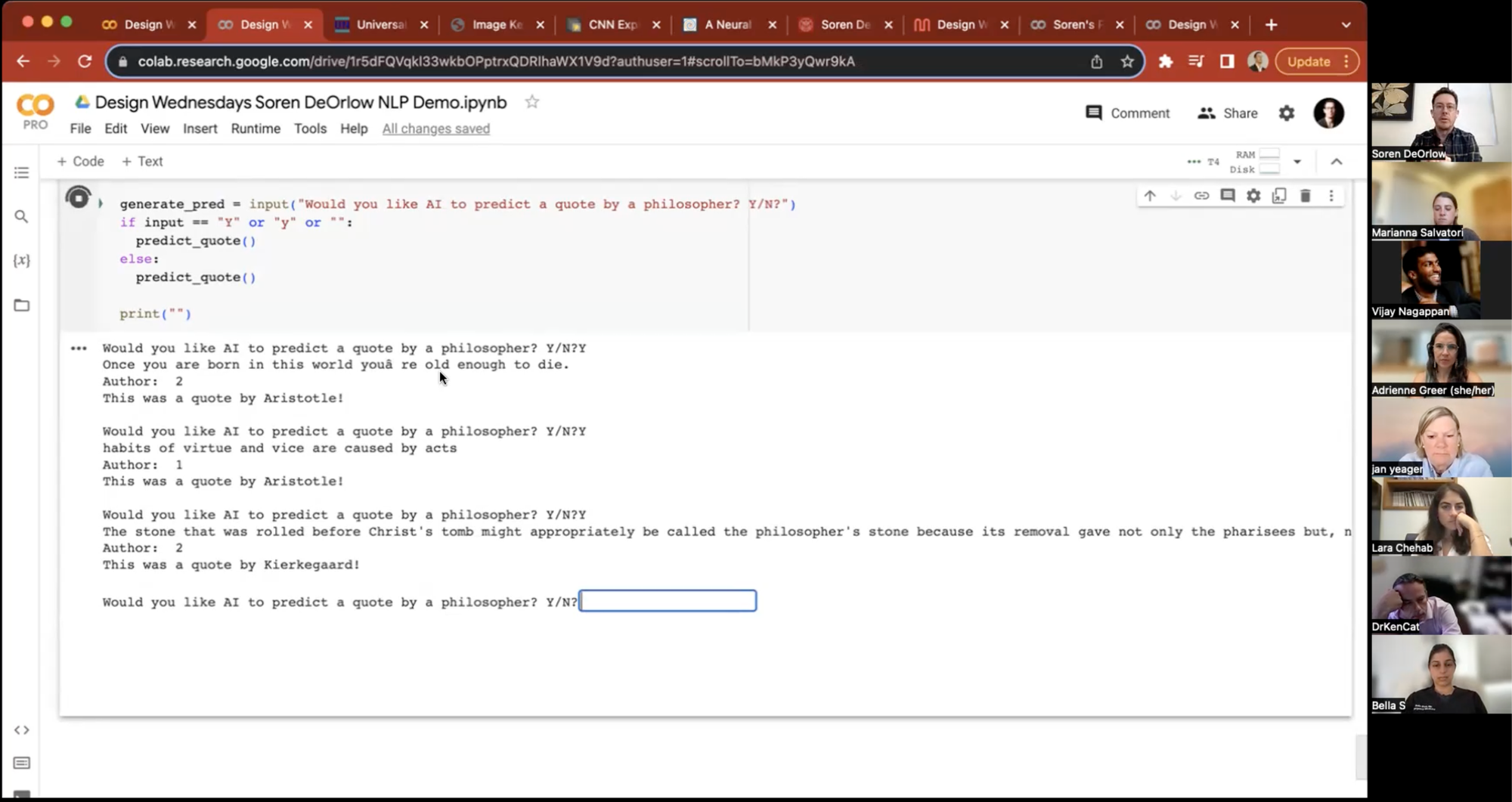

NLP Demo: https://colab.research.google.com/drive/1r5dFQVqkl33wkbOPptrxQDRlhaWX1V9d#scrollTo=bMkP3yQwr9kA